
Making Smart Contract Event Data More Accessible With IPFS

Smart contracts emit events that contain critical information users need to interact with protocols. However, accessing this historical data presents several challenges:

- RPC Limitations: The free tiers of RPC providers like Alchemy often cap how much event history a single query can cover

- Data Availability: Users need reliable access to complete event history to use many protocols

- Query Performance: Searching through event history can be slow without proper indexing

- Cost Efficiency: Storing and accessing large datasets becomes expensive at scale

As blockchain applications grow in complexity, these challenges become more significant. Fortunately, you can use IPFS to store, index, and serve event data using Pinata's existing APIs and Dedicated IPFS Gateways.

The IPFS Event Storage Pattern

By combining Pinata's storage capabilities with its metadata features, we can create a powerful, queryable event data repository that's:

- Reliable: Data persists on IPFS through Pinata's pinning service

- Performant: Structured for efficient querying through Pinata's metadata system

- Cost-effective: More economical than using premium RPC services

- Decentralized: Less reliant on centralized API providers

Let's see how to implement this solution step by step.

Example Implementation

Step 1: Set Up Your Environment

We’ll use Bun as our runtime for its built-in TypeScript support and performance, so be sure to install Bun if you haven’t yet.

We’ll first need to create a directory and change into it:

mkdir event-storage && cd event-storage

In your terminal, let’s initialize the project with Bun by running this command:

bun init -y

This will set up the TypeScript scaffolding and include a starter index.ts file in the project root. Open that file and replace its contents with:

import { PinataSDK } from 'pinata';

import { ethers } from 'ethers';

const pinata = new PinataSDK({

pinataJwt: process.env.PINATA_JWT,

pinataGateway: process.env.PINATA_GATEWAY_URL

});

const provider = new ethers.providers.JsonRpcProvider(process.env.ALCHEMY_RPC_URL);

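Now, let’s install the necessary dependencies. The code in this guide uses the ethers v5 API (for example, ethers.providers.JsonRpcProvider), so pin that major version:

bun add pinata ethers@5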

Make sure to create a .env file with your credentials:

PINATA_JWT=your_pinata_jwt_token

PINATA_GATEWAY_URL=pinata_gateway_url

ALCHEMY_RPC_URL=your_alchemy_endpoint

Now, let’s get those credentials. If you haven’t done so, you’ll need to sign up for a free account on Pinata and Alchemy.

When you’ve signed up for Pinata, you’ll need to generate an API key and grab the JWT. Here’s a guide to help you do so. Next, you’ll want to go to the Gateways page and copy your Gateway URL.

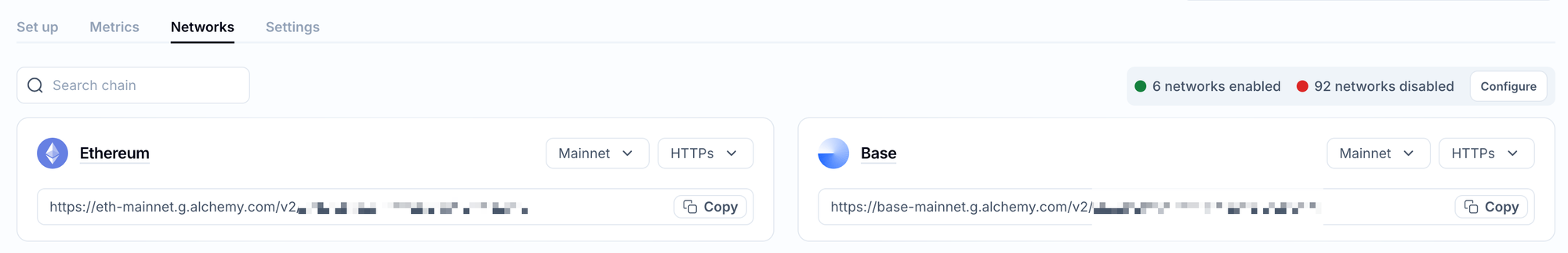

When you’ve signed up for Alchemy, you’ll need to create a project, and then you will want to click on the Networks tab and copy the URL of whichever network you’re interested in.

Enter these credentials into their spots in the .env file.
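
A missing or misspelled variable in the .env file tends to surface later as a confusing authentication or network error. The sketch below shows one way to fail early instead. The helper names requireEnv and loadConfig are hypothetical and are not part of the Pinata SDK or ethers; the variable names are the ones used in the .env file above.

```typescript
// Hypothetical helpers (not part of the Pinata SDK or ethers).
// An env-like object, e.g. process.env.
type Env = Record<string, string | undefined>;

// Return the trimmed value of a required variable, or throw a clear error.
function requireEnv(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === '') {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value.trim();
}

// Collect the three settings this guide relies on.
function loadConfig(env: Env) {
  return {
    pinataJwt: requireEnv(env, 'PINATA_JWT'),
    pinataGateway: requireEnv(env, 'PINATA_GATEWAY_URL'),
    rpcUrl: requireEnv(env, 'ALCHEMY_RPC_URL'),
  };
}
```

Calling loadConfig(process.env) at the top of index.ts and passing the result to the PinataSDK and provider constructors would stop the script immediately if a credential is missing. Bun loads .env files automatically, so no extra package should be needed for that.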

Step 2: Create a Contract Interface

Now, let's create a simple interface to the contract whose events we want to track:

// Set up contract interface

const contractAddress = '0xYourContractAddress';

const contractABI = [

// Your contract ABI here, focusing on the events

"event Transfer(address indexed from, address indexed to, uint256 value)",

// Add other events as needed

];

const contract = new ethers.Contract(contractAddress, contractABI, provider);

If you don’t already have a contract, you can follow one of our past guides to create and deploy a contract that emits events. Use your contract’s address and ABI in the code snippet above.

Step 3: Fetch Historical Event Data

Let's fetch historical events from your contract using the RPC provider:

async function fetchHistoricalEvents(fromBlock: number, toBlock: number, batchSize = 1000) {

console.log(`Fetching events from block ${fromBlock} to ${toBlock}`);

const events = [];

for (let i = fromBlock; i <= toBlock; i += batchSize) {

const currentBatchEnd = Math.min(i + batchSize - 1, toBlock);

try {

console.log(`Querying batch from ${i} to ${currentBatchEnd}`);

const batchEvents = await contract.queryFilter(

contract.filters.Transfer(),

i,

currentBatchEnd

);

const formattedEvents = batchEvents.map(event => ({

blockNumber: event.blockNumber,

transactionHash: event.transactionHash,

logIndex: event.logIndex,

// Format event-specific data

from: event.args.from,

to: event.args.to,

value: event.args.value.toString(),

// Time this record was indexed (not the block's own timestamp)

timestamp: new Date().toISOString()

}));

events.push(...formattedEvents);

console.log(`Retrieved ${formattedEvents.length} events in this batch`);

} catch (error) {

console.error(`Error fetching batch ${i}-${currentBatchEnd}:`, error);

}

// Add a small delay to avoid rate limiting

await new Promise(resolve => setTimeout(resolve, 500));

}

return events;

}
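
Before starting a long backfill, it can help to see how the block range will be split and how long the fixed delays alone will take. The following is a minimal sketch that mirrors the loop bounds in fetchHistoricalEvents. The helper names blockRanges and estimateDelaySeconds are hypothetical, and the estimate ignores RPC latency entirely.

```typescript
// Hypothetical helper: split an inclusive block range into inclusive
// [start, end] batches, using the same bounds as fetchHistoricalEvents.
function blockRanges(fromBlock: number, toBlock: number, batchSize: number): Array<[number, number]> {
  if (!Number.isInteger(batchSize) || batchSize <= 0) {
    throw new Error('batchSize must be a positive integer');
  }
  const ranges: Array<[number, number]> = [];
  for (let start = fromBlock; start <= toBlock; start += batchSize) {
    ranges.push([start, Math.min(start + batchSize - 1, toBlock)]);
  }
  return ranges;
}

// Lower bound on run time: one fixed delay per batch, ignoring RPC latency.
function estimateDelaySeconds(fromBlock: number, toBlock: number, batchSize: number, delayMs: number): number {
  const totalBlocks = Math.max(0, toBlock - fromBlock + 1);
  const batches = Math.ceil(totalBlocks / batchSize);
  return (batches * delayMs) / 1000;
}
```

For example, a range of one million blocks with the default batch size of 1000 and the 500 ms delay used above gives 1000 batches and at least 500 seconds of waiting, which is worth knowing before picking fromBlock.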

Step 4: Store Events on Pinata with Queryable Metadata

Now let's store these events on Pinata. We'll use a combination of:

- Storing the raw event data as JSON files

- Adding rich metadata to make them queryable

async function storeEventsOnPinata(events, batchSize = 100) {

console.log(`Storing ${events.length} events on Pinata`);

const results = [];

for (let i = 0; i < events.length; i += batchSize) {

const batch = events.slice(i, i + batchSize);

// Create a unique name for this batch

const batchName = `events_${batch[0].blockNumber}_to_${batch[batch.length-1].blockNumber}`;

try {

const uploadResponse = await pinata.upload.public.json(batch)

.name(batchName)

.keyvalues({

contractAddress: contractAddress,

eventType: 'Transfer', // Replace with your event type

startBlock: batch[0].blockNumber.toString(),

endBlock: batch[batch.length-1].blockNumber.toString(),

count: batch.length.toString()

});

console.log(`Uploaded batch ${batchName} with CID: ${uploadResponse.cid}`);

results.push(uploadResponse);

} catch (error) {

console.error(`Error uploading batch ${batchName}:`, error);

}

}

return results;

}

Notice that we’re using the .json method on the Pinata SDK to upload these files. It’s a simple wrapper that takes JSON data and turns it into a file that can be stored on IPFS.

The most important part is the keyvalues: these are what make our event data queryable.
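
Since the keyvalues drive every later query, it may be worth building them in one place so that each upload uses the same keys. Here is a minimal sketch of that idea. The helper buildBatchMetadata and the StoredEvent type are hypothetical; the key names match the ones passed to .keyvalues() above. It takes the minimum and maximum block rather than the first and last element, so it does not assume the batch is sorted.

```typescript
// Hypothetical shape: the fields every stored event in this guide has.
interface StoredEvent {
  blockNumber: number;
  transactionHash: string;
  logIndex: number;
}

// Hypothetical helper: derive the file name and keyvalues for one batch.
// All values are strings, matching the upload code in this step.
function buildBatchMetadata(batch: StoredEvent[], contractAddress: string, eventType: string) {
  if (batch.length === 0) {
    throw new Error('Cannot describe an empty batch');
  }
  const blocks = batch.map(e => e.blockNumber);
  const startBlock = Math.min(...blocks);
  const endBlock = Math.max(...blocks);
  return {
    name: `events_${startBlock}_to_${endBlock}`,
    keyvalues: {
      contractAddress,
      eventType,
      startBlock: String(startBlock),
      endBlock: String(endBlock),
      count: String(batch.length),
    },
  };
}
```

The returned name and keyvalues could then be passed to .name() and .keyvalues() in storeEventsOnPinata.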

Step 5: Create a Group for Your Contract's Events (Optional)

For better organization, let's create a dedicated group for all events from this contract:

async function createContractEventGroup() {

try {

// Check if group already exists

const groups = await pinata.groups.public.list().name(`Events_${contractAddress}`);

if (groups.groups && groups.groups.length > 0) {

console.log(`Group for contract ${contractAddress} already exists`);

return groups.groups[0];

}

const group = await pinata.groups.public.create({

name: `Events_${contractAddress}`,

});

console.log(`Created new group for contract ${contractAddress}: ${group.id}`);

return group;

} catch (error) {

console.error(`Error managing group for contract ${contractAddress}:`, error);

throw error;

}

}

Step 6: Add Event Batches to the Group (Optional)

Once we've created our group, let's add our event batches to it:

async function addEventsToGroup(group, uploadResults) {

try {

console.log(`Adding ${uploadResults.length} event batches to group ${group.id}`);

const fileIds = uploadResults.map(result => result.id);

await pinata.groups.public.addFiles({

groupId: group.id,

files: fileIds

});

console.log(`Successfully added all event batches to group ${group.id}`);

} catch (error) {

console.error(`Error adding events to group ${group.id}:`, error);

throw error;

}

}

Step 7: Listen for Real-Time Events

Now let's set up a listener for new events and add them to our storage in real-time:

function listenForEvents() {

console.log(`Starting to listen for new events from contract ${contractAddress}`);

contract.on('Transfer', async (from, to, value, event) => {

const formattedEvent = {

blockNumber: event.blockNumber,

transactionHash: event.transactionHash,

logIndex: event.logIndex,

from: from,

to: to,

value: value.toString(),

timestamp: new Date().toISOString()

};

try {

const uploadResponse = await pinata.upload.public.json([formattedEvent])

.name(`event_${event.blockNumber}_${event.logIndex}`)

.keyvalues({

contractAddress: contractAddress,

eventType: 'Transfer',

blockNumber: event.blockNumber.toString(),

from: from,

to: to,

});

console.log(`Uploaded new event with CID: ${uploadResponse.cid}`);

const groups = await pinata.groups.public.list().name(`Events_${contractAddress}`);

if (groups.groups && groups.groups.length > 0) {

await pinata.groups.public.addFiles({

groupId: groups.groups[0].id,

files: [uploadResponse.id]

});

}

} catch (error) {

console.error(`Error processing new event:`, error);

}

});

console.log(`Event listener set up successfully`);

}

Step 8: Put It All Together

Now let's combine all our functions to create a complete script:

async function main() {

try {

// Create or get existing group for this contract's events

const group = await createContractEventGroup();

// Define block range for historical data

const fromBlock = 10000000; // Adjust to your needs

const toBlock = await provider.getBlockNumber();

// Fetch historical events

const events = await fetchHistoricalEvents(fromBlock, toBlock);

console.log(`Fetched ${events.length} historical events`);

// Store events on Pinata

const uploadResults = await storeEventsOnPinata(events);

// Add to group

await addEventsToGroup(group, uploadResults);

// Set up listener for new events

listenForEvents();

console.log(`Setup complete. Listening for new events...`);

} catch (error) {

console.error('Error in main process:', error);

}

}

main();
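
Note that main() reads toBlock before the backfill starts, while the listener is attached only after the backfill and upload have finished. Events emitted in between are not captured, so you may want to backfill that window again, and a repeated backfill can overlap with events the listener has already stored. The sketch below shows one way to drop such duplicates before uploading. The helpers eventKey and dedupeEvents are hypothetical, and the sketch assumes a transaction hash plus log index identifies an event.

```typescript
// Hypothetical shape: the identifying fields stored for every event.
interface StoredEvent {
  blockNumber: number;
  transactionHash: string;
  logIndex: number;
}

// Assumption: transaction hash plus log index identifies one event.
function eventKey(e: StoredEvent): string {
  return `${e.transactionHash.toLowerCase()}:${e.logIndex}`;
}

// Keep the first occurrence of each event and preserve the input order.
function dedupeEvents<T extends StoredEvent>(events: T[]): T[] {
  const seen = new Set<string>();
  const unique: T[] = [];
  for (const e of events) {
    const key = eventKey(e);
    if (!seen.has(key)) {
      seen.add(key);
      unique.push(e);
    }
  }
  return unique;
}
```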

Accessing the Event Data

Once your data is stored on Pinata, users can access it in several ways:

1. Query by Metadata

Users can query the event data using Pinata's metadata filtering:

async function queryEventsByBlock(blockNumber) {

try {

// Query files containing the specific block

const files = await pinata.files.public.list()

.keyvalues({

contractAddress: contractAddress,

// Match files where blockNumber is between startBlock and endBlock

startBlock: { value: blockNumber.toString(), op: 'lte' },

endBlock: { value: blockNumber.toString(), op: 'gte' }

});

return files;

} catch (error) {

console.error(`Error querying events for block ${blockNumber}:`, error);

throw error;

}

}

async function queryEventsByFromAddress(address) {

try {

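// Query files whose metadata has a matching `from` key. Only the single-event files

// uploaded by the real-time listener carry this key; the historical batch files do not.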

const files = await pinata.files.public.list()

.keyvalues({

contractAddress: contractAddress,

from: address,

});

return files;

} catch (error) {

console.error(`Error querying events for address ${address}:`, error);

throw error;

}

}
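
These queries return file records for whole batches rather than individual events, so a batch that covers the requested block can also hold events from neighbouring blocks. After downloading a batch's JSON, a client would typically narrow it down locally. This is a minimal sketch of that step. The TransferRecord type mirrors the fields written in Step 3, and the helper names are hypothetical.

```typescript
// Mirrors the objects produced by fetchHistoricalEvents in Step 3.
interface TransferRecord {
  blockNumber: number;
  transactionHash: string;
  logIndex: number;
  from: string;
  to: string;
  value: string;
  timestamp: string;
}

// Hypothetical helper: keep only the events emitted in one block.
function filterByBlock(events: TransferRecord[], blockNumber: number): TransferRecord[] {
  return events.filter(e => e.blockNumber === blockNumber);
}

// Hypothetical helper: keep events where the address is sender or recipient.
// Addresses are compared case-insensitively, since checksummed and
// lowercase forms refer to the same account.
function filterByAddress(events: TransferRecord[], address: string): TransferRecord[] {
  const target = address.toLowerCase();
  return events.filter(
    e => e.from.toLowerCase() === target || e.to.toLowerCase() === target
  );
}
```

Filtering locally like this also works for the historical batch files, which carry no per-address metadata.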

2. Access via IPFS Gateway

Users can directly access the data returned from these queries via a Dedicated IPFS Gateway:

function getGatewayUrl(cid) {

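// Assumes PINATA_GATEWAY_URL holds your dedicated gateway's bare domain, e.g. example.mypinata.cloud

return `https://${process.env.PINATA_GATEWAY_URL}/ipfs/${cid}`;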

}

3. Browse the Public Group

If you've made your group public, users can browse all event files through the Pinata interface or programmatically:

async function getAllEventsInGroup() {

try {

const groups = await pinata.groups.public.list()

.name(`Events_${contractAddress}`);

if (!groups.groups || groups.groups.length === 0) {

throw new Error(`No group found for contract ${contractAddress}`);

}

const files = await pinata.files.public.list()

.group(groups.groups[0].id);

return files;

} catch (error) {

console.error(`Error fetching all events:`, error);

throw error;

}

}
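
Listing the group yields one file record per batch, and the batches may come back in any order. Once each file's JSON array has been downloaded, the arrays still need to be combined into a single chronological history. The sketch below shows one way to do that. The helper mergeBatches is hypothetical, and ordering by block number and then log index is an assumption about what "chronological" should mean here.

```typescript
// Hypothetical shape: the ordering fields stored for every event.
interface StoredEvent {
  blockNumber: number;
  transactionHash: string;
  logIndex: number;
}

// Hypothetical helper: flatten several downloaded batches into one array,
// ordered by block number and then by log index within a block.
function mergeBatches<T extends StoredEvent>(batches: T[][]): T[] {
  const merged: T[] = [];
  for (const batch of batches) {
    merged.push(...batch);
  }
  merged.sort((a, b) =>
    a.blockNumber !== b.blockNumber
      ? a.blockNumber - b.blockNumber
      : a.logIndex - b.logIndex
  );
  return merged;
}
```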

Benefits of This Approach

By implementing this pattern with IPFS and Pinata, your users gain several advantages:

- Reliability: Events stay available on IPFS for as long as Pinata keeps them pinned

- Performance: Metadata-based querying allows for fast data retrieval

- Cost Efficiency: More economical than using premium RPC services

- Decentralization: Less reliance on centralized API providers

- Flexibility: Data can be accessed through multiple methods (API, gateway, groups)

Ready to Get Started?

Create a Pinata account to begin implementing this pattern for your smart contract events.

Our team is also available to help with custom implementations and enterprise-grade solutions for high-volume contracts.