
How To Create AI Personas With IPFS

If you’ve been following Farcaster, the decentralized social network that drew a lot of interest in 2024 when it launched Frames, then you’re surely aware of Warpcast, the largest and most popular client. Warpcast is built by the team behind the protocol and is designed primarily to onboard people to it. As part of that initiative, Warpcast has introduced a rewards leaderboard. Every week, the top n creators (n because the number of winners has changed multiple times) get paid just for creating engaging content.

This got me thinking about what makes content engaging and whether it would be possible to test your content before actually posting it. Around the same time, I stumbled across a blog post on Every about creating a Hacker News tool that tests posts for their potential to make the front page. Every is paywalled, so I didn’t get to dive into the technical details too much, but being the egomaniac builder that I am, I decided that I could probably build this. I sent the idea to our CEO, Kyle, and he signed off on it.

Time to start figuring out how to actually build this. Here’s what I knew I’d need:

- AI bots that could act as if they were the top Warpcast rewards recipients

- A large enough sample of these bots to ensure I could get a good rating distribution

- A rating mechanism where these bots could review the casts and provide their persona-based rating

- A storage system that kept the inputs public (the persona data)

Building the Personas

I decided 100 of the top Farcaster users from this leaderboard would be a large enough sample. So, I used the Warpcast API to find the rewards leaderboard members. This got me profile information: I was able to see the users’ bios, usernames, and display names. But I figured that to build a solid persona, I’d need more.

I settled on grabbing the 5 most recent casts (Farcaster’s term for posts) from each user and combining them with the user profile information so that I had enough context to build a solid persona. But this didn’t exactly give me a one-sentence description of the user’s personality, and I felt like I needed that to help with prompting later. So, I did what all good prompt ninjas do: I used AI to generate a persona description.

Then, when I had the result from the LLM, I added the persona text to the other information about the user, created a .txt file from all of this, and uploaded it to IPFS using Pinata’s Groups and Key-Value features for organization. IPFS is important because transparency is important. The persona data should be public, and the content identifiers for the persona data are verifiable.

This is what the code looks like for that entire process:

import { PinataSDK } from 'pinata';

import OpenAI from 'openai';

const pinata = new PinataSDK({

pinataJwt: c.env.PINATA_JWT,

pinataGateway: c.env.PINATA_GATEWAY,

});

const openai = new OpenAI({ apiKey: c.env.OPEN_AI_KEY });

try {

let winners: Winner[] = [];

let personas = [];

let count = 0;

let cursor = "";

while (count < 4) {

try {

const res = await fetch(

`https://api.warpcast.com/v1/creator-rewards-winner-history?cursor=${cursor}`

);

const data: RewardsResponse = await res.json();

winners = [...winners, ...data.result.history.winners];

if (data.next.cursor && data.result.history.winners.length > 0) {

cursor = data.next.cursor;

}

count = count + 1;

} catch (error) {

console.log(error);

throw error;

}

}

// get first five casts and profile for each user, store them in JSON file, save to Pinata

for (const winner of winners) {

try {

const userRes = await fetch(

`https://api.neynar.com/v2/farcaster/user/bulk?fids=${winner.fid}`,

{

headers: {

'x-api-key': `${c.env.NEYNAR_API_KEY}`,

},

}

);

const userData = await userRes.json();

const userPersona: Persona = {

name: userData.users[0].display_name,

handle: userData.users[0].username,

farcasterFid: userData.users[0].fid,

bio: userData.users[0].profile.text,

lastFiveCasts: [],

generatedPersona: "",

};

const castData = await fetch(

`https://api.neynar.com/v2/farcaster/feed/user/casts?fid=${winner.fid}`,

{

headers: {

'x-api-key': `${c.env.NEYNAR_API_KEY}`,

},

}

);

const cast = await castData.json();

const casts = cast.casts.slice(0, 5);

// Use a name other than `c` so the request context `c` is not shadowed

userPersona.lastFiveCasts = casts.map((item: { text: string }) => item.text);

const completion = await openai.chat.completions.create({

model: "gpt-4o-mini",

store: true,

messages: [

{

role: "user",

content: `You are an expert at creating concise, witty, and personality-packed user summaries. Below is a detailed user persona. Your task is to distill this information into a short, engaging, and character-filled one-liner that captures the essence of the person. The tone should be fun, creative, and slightly playful, similar to a bio someone might put on a social media profile.

User Persona:

${formatUserPersona(userPersona)}

Example Output:

"Mid-90s baby wishing they were a boomer."

"Tech nerd with a coffee addiction and an opinion on everything."

"Just a guy trying to turn thoughts into tweets and tweets into conversations."

"Probably overthinking this bio as we speak."

Now, generate a one-liner that best represents this user.`,

},

],

});

const output = completion.choices[0].message;

userPersona.generatedPersona = output.content || "";

personas.push(userPersona);

} catch (error) {

console.log(error);

throw error;

}

}

await pinata.upload.public

.json(personas)

.addMetadata({ name: `${Date.now()}.txt` })

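.group(GROUP_ID);

} catch (error) {

// Closes the outer try opened at the top of this snippet

console.log(error);

throw error;

}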

As you can see, I’m using Neynar’s API to retrieve the necessary user and cast data, but you could also use a Farcaster Hub/Snapchain (Pinata offers one!).

Now, I had 100 user personas that could be spun up as AI agents ready to respond and rate proposed casts to see if they would get engagement.
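The snippet above relies on a `Persona` type and a `formatUserPersona` helper that aren't shown in this post. Here is a minimal sketch of what they might look like. The field names are inferred from how the object is built above, and the body of `formatUserPersona` is purely an assumption about how the persona could be flattened into prompt text.

```typescript
// Assumed shape, inferred from the fields set on `userPersona` above.
interface Persona {
  name: string;
  handle: string;
  farcasterFid: number;
  bio: string;
  lastFiveCasts: string[];
  generatedPersona: string;
}

// Hypothetical helper: turns a persona into plain text for the LLM prompt.
function formatUserPersona(persona: Persona): string {
  // Number the casts so the model can tell them apart.
  const casts = persona.lastFiveCasts
    .map((text, i) => `${i + 1}. ${text}`)
    .join("\n");

  return [
    `Name: ${persona.name}`,
    `Handle: @${persona.handle}`,
    `FID: ${persona.farcasterFid}`,
    `Bio: ${persona.bio}`,
    "Recent casts:",
    casts || "(none)",
  ].join("\n");
}

// Made-up sample data, only to show the output format.
const samplePersona: Persona = {
  name: "Sample User",
  handle: "sample",
  farcasterFid: 1234,
  bio: "Builder and coffee drinker.",
  lastFiveCasts: ["gm", "Shipping something new today"],
  generatedPersona: "",
};

console.log(formatUserPersona(samplePersona));
```

Labeled plain text like this tends to be easier to read back in a prompt than raw JSON, but either would work.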

Creating the Persona Bot Army

This was, of course, going to take more AI prompting, but I wanted to get creative here. I thought it would be interesting to send prompts to various LLMs and instead of creating one mega-prompt with all the user personas, I’d prompt and capture the responses individually for each Farcaster persona.

Here’s the code:

const { cast } = await c.req.json();

const personaFileList = await pinata.files.public

.list()

.group(GROUP_ID)

.limit(1);

const result = await pinata.gateways.public.get(personaFileList[0].ipfs_pin_hash);

const personas: any = result.data;

const shuffled =

personas && personas.length > 0

? personas.slice().sort(() => 0.5 - Math.random())

: [];

const first: any = await fetchPersonasIndividually(

c,

cast,

shuffled.slice(0, 50),

"cloudflare"

);

const second: any = await fetchPersonasIndividually(

c,

cast,

shuffled.slice(50, 100),

"openai"

);

const ratings = [...first, ...second];

const sum = ratings.reduce(

(acc: any, rating: any) => acc + Number((rating && rating.rating) || 0),

0

);

const avg = sum / ratings.length;

In the above example, I used Pinata’s API to fetch the file holding all of the persona data. Then I shuffled the personas and split them into two batches of 50, one for each of the two LLMs I was sending them to. You could create batches of any size and extend this to use any number of LLMs. I stuck with Cloudflare (since I was using a Worker), OpenAI, and Anthropic (not shown here).
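If you do want to spread the personas across more than two providers, the shuffle-and-split step can be generalized. The sketch below is not the code used in this project. It swaps the `sort(() => 0.5 - Math.random())` trick for a Fisher-Yates shuffle, which gives a uniform ordering, and adds a hypothetical `splitIntoBatches` helper that divides the list into any number of near-equal batches.

```typescript
// Fisher-Yates shuffle: returns a new, uniformly shuffled copy of the input.
function shuffle<T>(items: readonly T[], random: () => number = Math.random): T[] {
  const out = items.slice();
  for (let i = out.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    const tmp = out[i] as T;
    out[i] = out[j] as T;
    out[j] = tmp;
  }
  return out;
}

// Splits items into `parts` contiguous batches whose sizes differ by at most one.
function splitIntoBatches<T>(items: readonly T[], parts: number): T[][] {
  if (!Number.isInteger(parts) || parts < 1) {
    throw new RangeError("parts must be a positive integer");
  }
  const base = Math.floor(items.length / parts);
  const extra = items.length % parts; // the first `extra` batches get one more item
  const batches: T[][] = [];
  let start = 0;
  for (let p = 0; p < parts; p++) {
    const size = base + (p < extra ? 1 : 0);
    batches.push(items.slice(start, start + size));
    start += size;
  }
  return batches;
}

// Stand-in data: 100 numeric ids in place of the real persona objects.
const providerNames = ["cloudflare", "openai", "anthropic"];
const personaIds = Array.from({ length: 100 }, (_, i) => i);
const providerBatches = splitIntoBatches(shuffle(personaIds), providerNames.length);

providerNames.forEach((name, i) => {
  console.log(name, providerBatches[i]?.length ?? 0);
});
```

With 100 personas and three providers, the batches come out as 34, 33, and 33, so every persona is rated exactly once.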

To really understand what’s going on, though, we need to see what’s happening in the fetchPersonasIndividually function.

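// Packages used by this function, in addition to the OpenAI client from the 'openai' package

import Anthropic from '@anthropic-ai/sdk';

import pMap from 'p-map';

async function fetchPersonasIndividually(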

c: Context,

cast: string,

personas: Persona[],

provider: string

) {

const validPersonas = personas.filter((p) => p.generatedPersona);

if (validPersonas.length === 0) return [];

// Map function to process each persona

const processPersona = async (persona: Persona) => {

if (!persona.generatedPersona) {

return null;

}

try {

let completion;

const systemPrompt = `You are ${persona.generatedPersona}, and you have been tasked with rating the proposed social media post text from the user on a scale of 1-10. Respond with your rating only. Any deviation from the rating format will be considered a failure. EXAMPLE GOOD RESPONSE: 4. EXAMPLE BAD RESPONSE: I would rate this a 4. If you respond with anything other than a number, it will be a failure and discarded.`;

switch (provider.toLowerCase()) {

case 'openai':

const openai = new OpenAI({ apiKey: c.env.OPEN_AI_KEY });

completion = await openai.chat.completions.create({

model: "gpt-3.5-turbo",

messages: [

{ role: "system", content: systemPrompt },

{ role: "user", content: cast },

],

});

break;

case 'anthropic':

const anthropic = new Anthropic({ apiKey: c.env.ANTHROPIC_KEY });

const anthropicResponse = await anthropic.messages.create({

model: "claude-3-sonnet-20240229",

system: systemPrompt,

messages: [{ role: "user", content: cast }],

max_tokens: 10,

});

completion = { choices: [{ message: { content: anthropicResponse.content[0].text } }] };

break;

case 'cloudflare':

const cloudflareResponse = await c.env.AI.run('@cf/meta/llama-3-8b-instruct', {

messages: [

{ role: "system", content: systemPrompt },

{ role: "user", content: cast },

],

});

completion = { choices: [{ message: { content: cloudflareResponse.response } }] };

break;

default:

throw new Error(`Unsupported provider: ${provider}`);

}

if (

typeof completion.choices[0]?.message?.content === "string" &&

/^\d+$/.test(completion.choices[0]?.message?.content)

) {

return {

user: {

name: persona.name,

handle: persona.handle,

bio: persona.bio,

fid: persona.farcasterFid,

persona: persona.generatedPersona,

},

rating: completion.choices[0]?.message?.content || "No response",

};

} else {

console.log("not a number");

console.log(completion.choices[0]?.message?.content);

return null;

}

} catch (error) {

console.error("Error processing persona:", persona.name, error);

return null;

}

};

// Uses p-map to limit concurrency

const results = await pMap(validPersonas, processPersona, { concurrency: 10 });

return results.filter(Boolean);

}
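The validation step in the function only accepts a response made up entirely of digits. In practice, models often add a trailing newline or return a number outside the requested range, so a slightly more forgiving parser can help. The `parseRating` helper below is a hypothetical addition, not part of the original project. It trims whitespace, requires a whole number, and enforces the 1-10 scale from the prompt.

```typescript
// Hypothetical helper: returns a rating between min and max, or null if the
// model's response is not a clean whole number in that range.
function parseRating(raw: unknown, min = 1, max = 10): number | null {
  if (typeof raw !== "string") return null;

  // Tolerate surrounding whitespace such as a trailing newline.
  const trimmed = raw.trim();

  // Digits only: rejects "4.5", "I would rate this a 4", and empty strings.
  if (!/^\d+$/.test(trimmed)) return null;

  const value = Number(trimmed);
  return value >= min && value <= max ? value : null;
}

console.log(parseRating("7\n"));                   // 7
console.log(parseRating("I would rate this a 4")); // null
console.log(parseRating("11"));                    // null
```

Returning a number instead of a string also means the averaging step no longer needs to convert each rating.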

This code runs the same prompt—which is the combination of the instructions, the proposed cast text, and the AI persona to embody—against whatever the selected AI provider is. To keep things fast, it uses p-map to concurrently process requests while also ensuring it doesn’t overload resources or blow through rate limits.
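To make the concurrency limit less of a black box, here is a rough, dependency-free sketch of the idea. This is not how p-map is implemented internally, and `mapWithConcurrency` is a made-up name. It only illustrates the worker-pool pattern: a fixed number of workers each pull the next unprocessed item until the list is exhausted.

```typescript
// Runs `mapper` over `items` with at most `concurrency` calls in flight.
// Results are returned in input order. Rejects on the first mapper error.
async function mapWithConcurrency<T, R>(
  items: readonly T[],
  mapper: (item: T, index: number) => Promise<R>,
  concurrency: number
): Promise<R[]> {
  if (!Number.isInteger(concurrency) || concurrency < 1) {
    throw new RangeError("concurrency must be a positive integer");
  }

  const results = new Array<R>(items.length);
  let next = 0; // index of the next item nobody has claimed yet

  // Each worker claims an index, processes it, then loops for another.
  const worker = async (): Promise<void> => {
    while (next < items.length) {
      const index = next++;
      results[index] = await mapper(items[index] as T, index);
    }
  };

  const workerCount = Math.min(concurrency, items.length);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}

// Usage: double five numbers, two at a time, with a small simulated delay.
const wait = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

mapWithConcurrency([1, 2, 3, 4, 5], async (n) => {
  await wait(10);
  return n * 2;
}, 2).then((doubled) => console.log(doubled));
```

Because JavaScript runs this on a single thread, the `next++` claim is safe without locks. For real workloads, p-map is still the better choice since it handles edge cases this sketch ignores.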

With this function, we are literally spinning up 100 AI instances of the personas generated earlier with the sole goal of providing a rating for the proposed cast. With the responses, the user who wants to create a cast can decide if their proposed cast is strong enough or if it needs to be tweaked.
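A single average can hide a lot, so it may be worth looking at the whole distribution before deciding whether to post. The sketch below uses a hypothetical `summarizeRatings` helper that is not part of the original project. It assumes the ratings have already been converted to numbers, and it returns `null` for the mean and median when every response was discarded, rather than dividing by zero.

```typescript
interface RatingSummary {
  count: number;
  mean: number | null;
  median: number | null;
  histogram: Record<number, number>; // rating -> how many personas gave it
}

// Hypothetical helper: summarizes a list of numeric ratings.
function summarizeRatings(ratings: readonly number[]): RatingSummary {
  const histogram: Record<number, number> = {};
  for (const rating of ratings) {
    histogram[rating] = (histogram[rating] ?? 0) + 1;
  }

  // No usable ratings: avoid dividing by zero.
  if (ratings.length === 0) {
    return { count: 0, mean: null, median: null, histogram };
  }

  const sorted = ratings.slice().sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  const median =
    sorted.length % 2 === 1
      ? (sorted[mid] as number)
      : ((sorted[mid - 1] as number) + (sorted[mid] as number)) / 2;
  const mean = sorted.reduce((acc, rating) => acc + rating, 0) / sorted.length;

  return { count: sorted.length, mean, median, histogram };
}

// Made-up ratings, only to show the shape of the result.
console.log(summarizeRatings([7, 8, 8, 9, 3]));
```

A cast with a mean of 7 where a fifth of the personas gave it a 2 might deserve a second look, and the histogram makes that visible.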

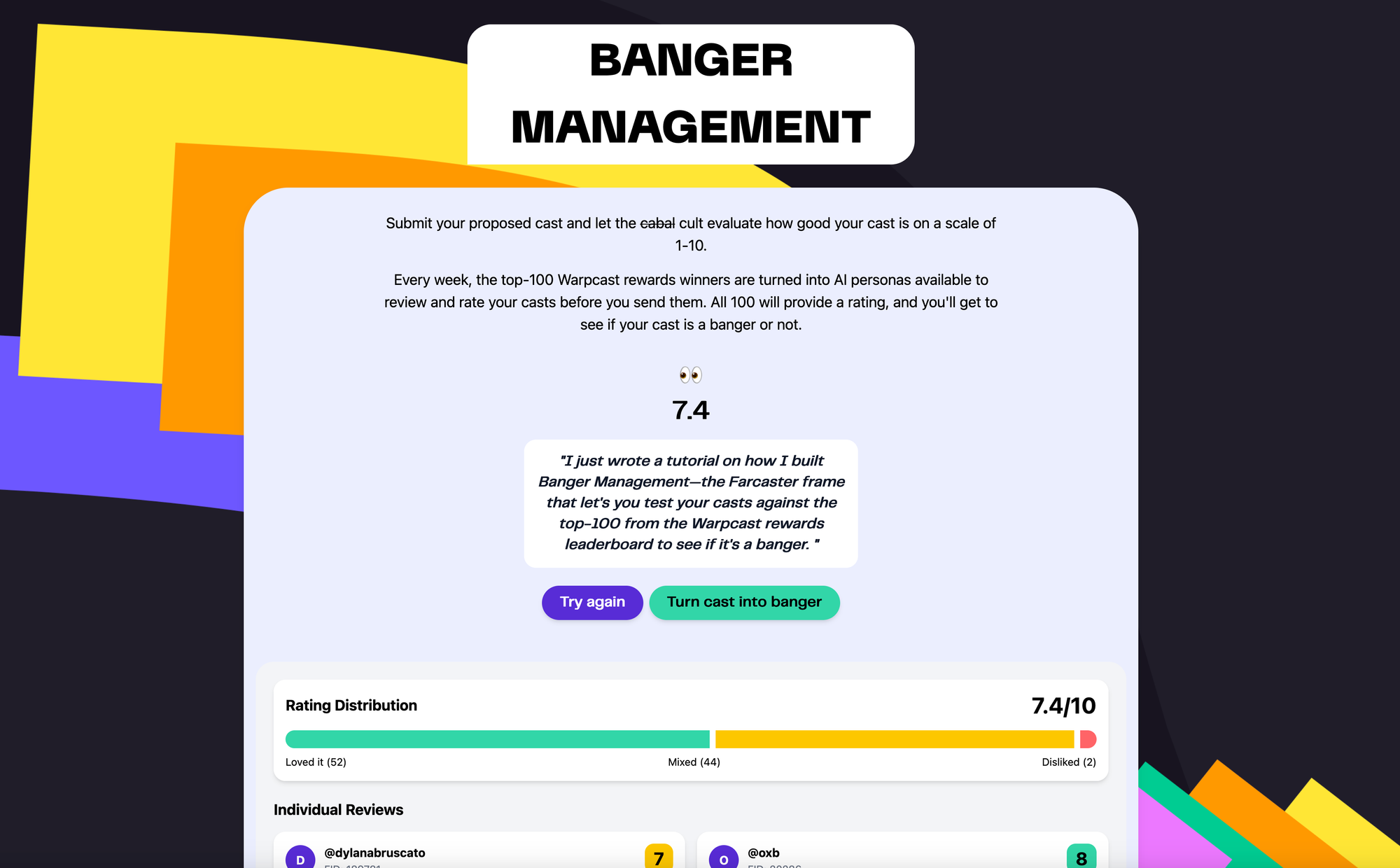

So, how does it look when fully implemented? Well, I tested a cast I would like to write about this blog post.

With a rating like that, I’m probably going to post it as is! If you want to try it for yourself, you can do so here. Though, it’s best experienced in the Farcaster feed.

Conclusion

LLMs are especially good at ideating and having conversations that help you think. In programming (and maybe elsewhere), this is called rubber-ducking. Sometimes you just need to talk to a rubber duck and the solution comes to you. With AI, the duck can talk back.

This entire concept can be extended to so many viable use cases. Are you a novelist who wants to test your query letter? Use this, but with personas built from literary agents. Writing a school paper? Do the same, but with personas generated from professors.

The beautiful thing is that the original content is written by a person, and AI is used only to reinforce and improve it, grounded in real-world persona data.

Want to build your own? Get started on Pinata for free today!