Back to blog

Building IPDB: A Decentralized Database using Base, IPFS, and PGlite

As Web3 technology has progressed, we've seen it replace most computing paradigms with decentralized solutions. However the one exception has been traditional databases. Trying to use EVM smart contracts to store tables ends up being inefficient due to gas and compute costs, and solutions like IPFS don’t work since all files are static. However, new database libraries, like PGLite, could change the discourse and invent a whole new way to manage local and distributed remote databases.

In this post, we’ll go over a rough proposal, which I’m calling “IPDB” or “InterPlanetary Database.” Keep in mind that while this is not a perfect solution for every use case, it is an interesting path we could see more of in the future. Companies like ElectricSQL who are developing PGlite and pushing a model where databases are local first and then sync to a remote database through a sync engine. If you take this model and find a way to create a distributed sync engine, then you have a really unique solution for the Web3 ecosystem!

Concept

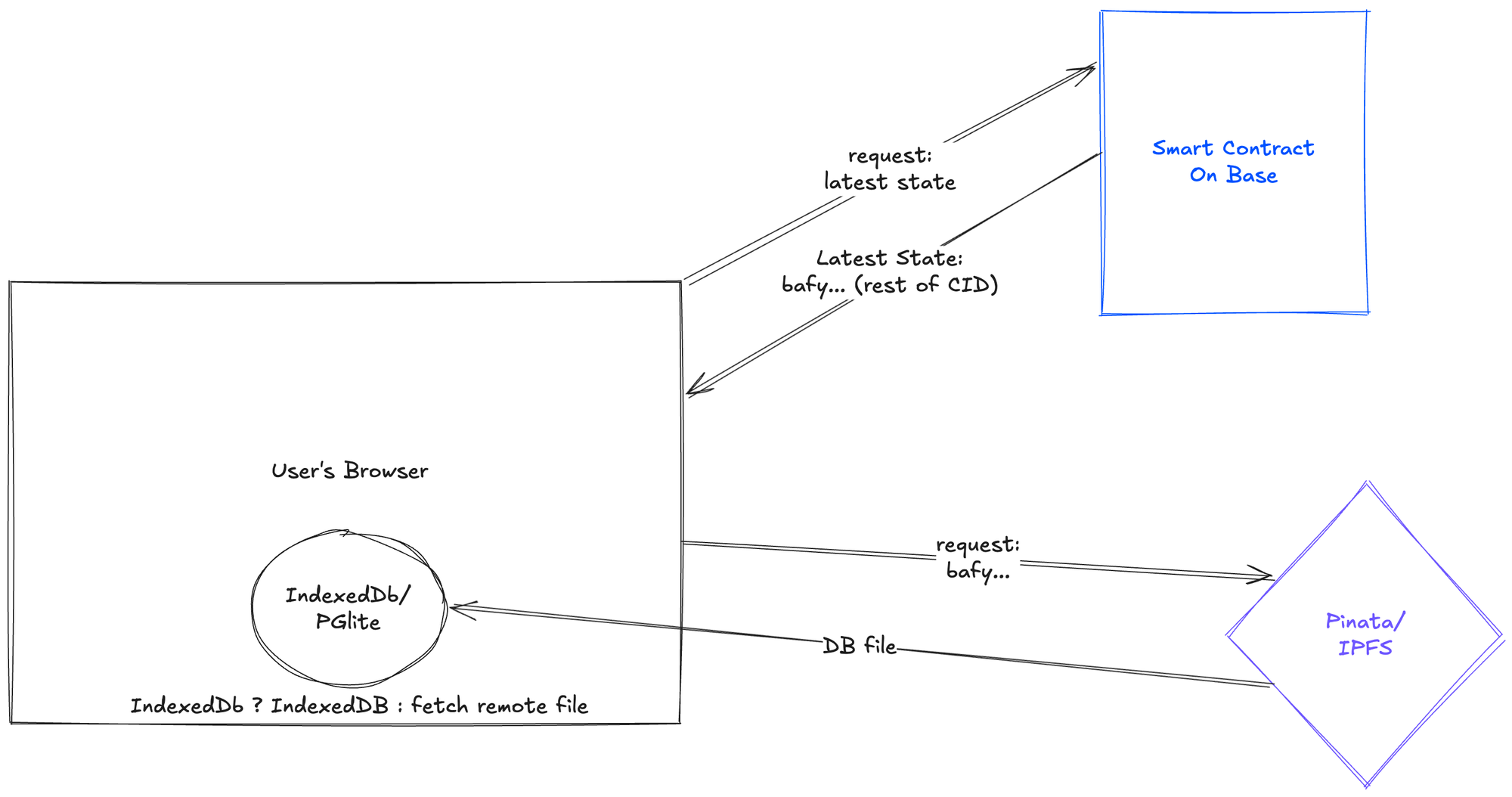

The main idea behind IPDB is go local first and then have a backup system with IPFS and smart contracts on Base.

This flow would involve several steps. First, you would need to create an initial database with your defined tables, dump the database directory into a tarball file, upload that file to IPFS via Pinata, then use a custom smart contract to track the IPFS hash as sort of an index. Once initiated, you can have a client app that will first check if there is a IndexedDB file in the browser. If not, it will make a request to the smart contract for the latest index hash, then use that hash to make a request to IPFS for the file. Once the file is downloaded, you can save it to IndexedDB and use it as the database in browser.

At any point, you can initiate a backup and restore kind of sync, where you can upload an updated database file to IPFS, get a new hash (since all IPFS CIDs are determined by the content), then update the smart contract index with the new hash. You can equally do the opposite where you clear the in-browser IndexedDB to get the most recent database file. In the end, you get a local first database that is incredibly snappy, lightweight, and portable. If you wanted to make it private, then you could try encrypting and decrypting the file or use a fully private system like Pinata’s Files API. Let’s go over some of the details of how we implemented this into a simple to-do app.

Smart Contract

The beauty of this model is that you can make it as simple or as complicated as you want. For this to-do app, we really one need the following:

- A state variable in the form of a string

- A method to update the state

- A method to get the latest state

- Bonus: An event to emit when the state is updated

- Ownable restrictions

With those requirements, we have one really simple contract that only the deployer can update.

// SPDX-License-Identifier: MIT

// Compatible with OpenZeppelin Contracts ^5.0.0

pragma solidity ^0.8.20;

import "@openzeppelin/contracts/access/Ownable.sol";

contract IPDB is Ownable {

string private dbState;

event dbUpdated(string newCid);

constructor() Ownable(msg.sender) {}

function update(string memory cid) public onlyOwner {

dbState = cid;

emit dbUpdated(cid);

}

function getState() public view returns (string memory) {

return dbState;

}

}

For our IPDB app, I just deployed this smart contract on Base, copied down the address, and then saved the ABI to be used in our client app. Thanks to the speed and cost effectiveness of Base, we can really utilize this contract to handle our index of state.

Client

We won’t go too deep into the framework and setup, but in summary, for the app I decided to use Astro with a React integration, but you could really use any framework you want as long as it has a way to have secured API routes / server rendered code to keep API keys secure. We’re also going with a local private key method to write to our smart contract, but you could change this to be through a user’s wallet instead, if you wanted to. I’m also not going to do a step by step tutorial, but the source code can be found here if you want to follow along!

One of the first things we’re going to make is the script to initialize the database.

import { pinata, publicClient, walletClient } from "@/utils/config";

import { account } from "@/utils/account";

import { abi } from "@/utils/contract.json";

import { PGlite } from "@electric-sql/pglite";

(async () => {

const db = new PGlite();

await db.exec(`

CREATE TABLE IF NOT EXISTS todo (

id SERIAL PRIMARY KEY,

task TEXT,

done BOOLEAN DEFAULT false

);

`);

const file = (await db.dumpDataDir("auto")) as File;

const upload = await pinata.upload.file(file);

const { request: contractRequest } = await publicClient.simulateContract({

account,

address: import.meta.env.PUBLIC_CONTRACT_ADDRESS,

abi: abi,

functionName: "update",

args: [`${upload.IpfsHash}`],

});

const tx = await walletClient.writeContract(contractRequest);

console.log(tx);

})();

In this script, we create our initial database with PGlite as well as create our to-do table, which just has a simple id, task, and done status. Then we dump that database directory as a tarball file, upload it using Pinata to IPFS, then we make a smart contract function call to update the state using our IPFS hash from the upload. Once this is done we’ll have a starting point for the app to use.

Next, we’ll have our really big React component which really handles all of our functionality.

import { PGlite } from "@electric-sql/pglite";

import { Button } from "./ui/button";

import { useState, useEffect } from "react";

import { Input } from "./ui/input";

import { Checkbox } from "./ui/checkbox";

import { publicClient, pinata } from "@/utils/config";

import { abi } from "@/utils/contract.json";

import { checkDatabaseExists } from "@/utils/db";

import { ReloadIcon } from "@radix-ui/react-icons";

import { useToast } from "@/hooks/use-toast";

let db: PGlite | undefined;

interface ToDo {

id: number;

task: string;

done: boolean;

}

export default function Database() {

const [todos, setTodos] = useState<ToDo[]>([]);

const [taskName, setTaskName] = useState("");

const [loading, setLoading] = useState(true);

const [saving, setSaving] = useState(false);

const { toast } = useToast();

async function importDb() {

try {

setLoading(true);

const dbCid = await publicClient.readContract({

address: import.meta.env.PUBLIC_CONTRACT_ADDRESS,

abi: abi,

functionName: "getState",

});

console.log(dbCid);

const dbExists = await checkDatabaseExists("todo-db");

if (!dbExists) {

const dbFile = await pinata.gateways.get(dbCid as unknown as string);

const file = dbFile.data as Blob;

db = new PGlite({

loadDataDir: file,

dataDir: "idb://todo-db",

});

console.log("used remote db");

} else {

db = new PGlite({

dataDir: "idb://todo-db",

});

console.log("used local db");

}

const ret = await db?.query(`

SELECT * from todo ORDER BY id ASC;

`);

setTodos(ret?.rows as ToDo[]);

toast({

title: "Database Restored",

});

setLoading(false);

} catch (error) {

setLoading(false);

console.log(error);

}

}

async function addTodo() {

try {

await db?.query("INSERT INTO todo (task, done) VALUES ($1, false)", [

taskName,

]);

const ret = await db?.query(`

SELECT * from todo;

`);

setTodos(ret?.rows as ToDo[]);

setTaskName("");

console.log(ret?.rows);

} catch (error) {

console.log(error);

}

}

async function updateTodo(id: number, done: boolean) {

try {

await db?.query("UPDATE todo SET done = $1 WHERE id = $2", [done, id]);

const ret = await db?.query("SELECT * from todo ORDER BY ID ASC;");

setTodos(ret?.rows as ToDo[]);

} catch (error) {

console.log(error);

}

}

async function deleteTodo(id: number) {

try {

await db?.query("DELETE FROM todo WHERE id = $1", [id]);

const ret = await db?.query("SELECT * from todo ORDER BY ID ASC;");

setTodos(ret?.rows as ToDo[]);

} catch (error) {

console.log(error);

}

}

async function saveDb() {

try {

setSaving(true);

const dbFile = await db?.dumpDataDir("auto");

if (!dbFile) {

throw new Error("Failed to dump database");

}

const data = new FormData();

data.append("file", dbFile);

const req = await fetch("/api/upload", {

method: "POST",

body: data,

});

const res = await req.json();

console.log(res);

toast({

title: "Database Saved",

});

setSaving(false);

} catch (error) {

setSaving(false);

console.log(error);

}

}

function taskNameHandle(e: React.ChangeEvent<HTMLInputElement>) {

setTaskName(e.target.value);

}

useEffect(() => {

importDb();

}, []);

return (

<div className="flex flex-col gap-2">

{loading ? (

<ReloadIcon className="h-12 w-12 animate-spin" />

) : (

<>

<div className="flex flex-row items-center gap-4">

<Input value={taskName} onChange={taskNameHandle} type="text" />

<Button onClick={addTodo}>Add Todo</Button>

</div>

<div className="flex flex-col gap-2 items-start">

{todos ? (

todos.map((item: ToDo) => (

<div

className="w-full flex items-center justify-between gap-2"

key={item.id}

>

<div className="flex items-center gap-2">

<Checkbox

onCheckedChange={(checked) =>

updateTodo(item.id, checked as boolean)

}

checked={item.done}

/>

<p className={item.done ? "line-through" : ""}>

{item.task}

</p>

</div>

<Button

size="icon"

variant="destructive"

onClick={() => deleteTodo(item.id)}

>

X

</Button>

</div>

))

) : (

<p>No todos yet</p>

)}

</div>

<div className="w-full">

{saving ? (

<Button className="w-full" disabled>

<ReloadIcon className="mr-2 h-4 w-4 animate-spin" />

Saving...

</Button>

) : (

<Button className="w-full" onClick={saveDb}>

Save

</Button>

)}

</div>

</>

)}

</div>

);

}

Admittedly, this is a gnarly piece of code with a lot going on, but we’ll go piece by piece to explain what’s happening and how it’s working. The first function we’ll want to look closer at is our importDb() function that runs once the app is started via useEffect.

async function importDb() {

try {

setLoading(true);

const dbCid = await publicClient.readContract({

address: import.meta.env.PUBLIC_CONTRACT_ADDRESS,

abi: abi,

functionName: "getState",

});

console.log(dbCid);

const dbExists = await checkDatabaseExists("todo-db");

if (!dbExists) {

const dbFile = await pinata.gateways.get(dbCid as unknown as string);

const file = dbFile.data as Blob;

db = new PGlite({

loadDataDir: file,

dataDir: "idb://todo-db",

});

console.log("used remote db");

} else {

db = new PGlite({

dataDir: "idb://todo-db",

});

console.log("used local db");

}

const ret = await db?.query(`

SELECT * from todo ORDER BY id ASC;

`);

setTodos(ret?.rows as ToDo[]);

toast({

title: "Database Restored",

});

setLoading(false);

} catch (error) {

setLoading(false);

console.log(error);

}

}

The first thing we do is get the initial state from our smart contract in the form of a CID or IPFS Hash. Then we run a helper function to check if there is an IndexedDB instance with the name “todo-db”. This prevents downloading the DB every single time, but in the case that there is no IndexedDB then we can download the file using Pinata from IPFS, parse it, then feed it into the PGlite instance. What’s really cool here is that not only will it load the tarball file of our DB into memory, but it will also persist it to idb (IndexedDB) making it local, that way if we ran this check again, we’ll just use that local instance instead. Once the database is loaded, we make a simple query to fetch all of our todos and put them into some React state.

When it comes to updating our to-do’s we can just follow a simple CRUD pattern.

async function addTodo() {

try {

await db?.query("INSERT INTO todo (task, done) VALUES ($1, false)", [

taskName,

]);

const ret = await db?.query(`

SELECT * from todo;

`);

setTodos(ret?.rows as ToDo[]);

setTaskName("");

console.log(ret?.rows);

} catch (error) {

console.log(error);

}

}

async function updateTodo(id: number, done: boolean) {

try {

await db?.query("UPDATE todo SET done = $1 WHERE id = $2", [done, id]);

const ret = await db?.query("SELECT * from todo ORDER BY ID ASC;");

setTodos(ret?.rows as ToDo[]);

} catch (error) {

console.log(error);

}

}

async function deleteTodo(id: number) {

try {

await db?.query("DELETE FROM todo WHERE id = $1", [id]);

const ret = await db?.query("SELECT * from todo ORDER BY ID ASC;");

setTodos(ret?.rows as ToDo[]);

} catch (error) {

console.log(error);

}

}

Each of these are pretty self explanatory, but the piece to keep in mind is that it’s all happening with the IndexedDB; local first!

For saving the database state and writing it to the smart contract, we have the following saveDb function.

async function saveDb() {

try {

setSaving(true);

const dbFile = await db?.dumpDataDir("auto");

if (!dbFile) {

throw new Error("Failed to dump database");

}

const data = new FormData();

data.append("file", dbFile);

const req = await fetch("/api/upload", {

method: "POST",

body: data,

});

const res = await req.json();

console.log(res);

toast({

title: "Database Saved",

});

setSaving(false);

} catch (error) {

setSaving(false);

console.log(error);

}

}

This will dump the client side database directory and send it as a file to an API route where it’s uploaded.

import type { APIRoute } from "astro";

import { pinata, walletClient, publicClient } from "@/utils/config";

import { account } from "@/utils/account";

import { abi } from "@/utils/contract.json";

export const POST: APIRoute = async ({ request }) => {

const data = await request.formData();

const file = data.get("file") as File;

if (!file) {

return new Response(

JSON.stringify({

message: "Missing file",

}),

{ status: 400 },

);

}

const { IpfsHash } = await pinata.upload.file(file);

const { request: contractRequest } = await publicClient.simulateContract({

account,

address: import.meta.env.PUBLIC_CONTRACT_ADDRESS,

abi: abi,

functionName: "update",

args: [IpfsHash],

});

const tx = await walletClient.writeContract(contractRequest);

const transaction = await publicClient.waitForTransactionReceipt({

hash: tx,

});

return new Response(

JSON.stringify({

data: transaction.status,

}),

{ status: 200 },

);

};

This is almost identical to our initialization script where we taking the incoming file through formData, upload it to Pinata, get the IPFS hash, and write it to the contract. That easy! In the end we have an app that can load a remote database, keep it local, yet still backup to IPFS and smart contracts.

This whole app can be deployed yourself using this smart contract repo and the app repo here, with all the instructions for each in the README files. Would love to see what people could build with them!

Wrapping Up

Like we mentioned earlier, this concept isn’t bulletproof depending on your use case, but in our opinion, it creates some interesting possibilities. One such possibility, suggested by Sam Willis, one of the builders of PGlite, is to write a virtual file system on top of IPFS, allowing the sync of content by individual files instead of dumping the entire directory. This would allow for more frequent and faster sync methods that could be applied. On the Web3 side of things, there’s lot of flexibility in how you manage the database indexes. In our example, we have events emit when a new state is changed, so you could use that as a version history of save states. Another idea is to use a contract factory so each database can be tied to a wallet by implemented SIWE.

Overall we’re excited by the advancement of tech like PGlite and Base which really enable ideas like this. Pinata’s mission from the beginning was to make IPFS easy, and we still do that along with private file uploads, so be sure to try it out if you haven’t already! Happy Pinning!